Why the AI Conversation Misses the Point

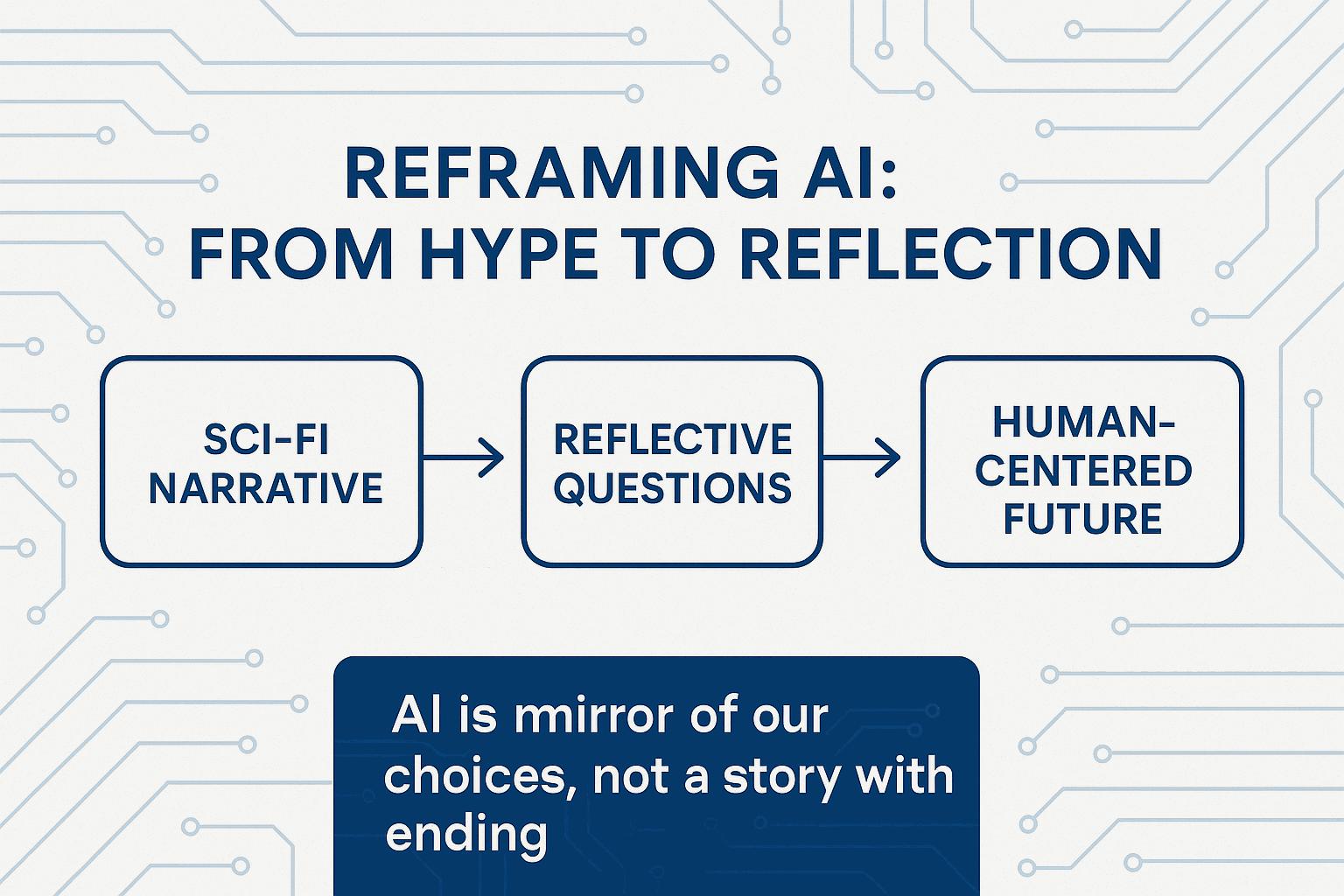

Why does every AI conversation sound like a prophecy already written? Utopia or apocalypse. Jobs obliterated or lives perfected. It’s seductive to imagine artificial intelligence hurtling toward a cinematic finale. But here’s the uncomfortable truth: AI isn’t a character in some inevitable plot. It’s a mirror—flashing back the choices we make, the biases we bake in, the fears we can’t shake.

Every algorithm is a decision tree planted by human hands. Every model whispers the intentions of its makers. The question isn’t “Where is AI going?” but “What does it expose about us: our priorities, our resistance, our blind spots?”

The AI dialogue doesn’t need more pundits declaring certainty. It needs more people willing to sit in uncertainty. To trade answers for questions.

📖 The Flawed Narrative: AI as Destiny

We crave clean narratives. The media delivers: “AI will save the planet” or “AI will end us all.” It feels comforting to believe this is a straight line toward triumph or catastrophe.

But AI isn’t an autonomous force rewriting history—it’s us, codified. It’s developers wrestling with datasets, companies deciding what outcomes matter, regulators fumbling with ethical frameworks. A biased dataset doesn’t “happen” like bad weather; it’s a choice. A thoughtful dataset doesn’t “emerge”; it’s a choice too.

Sci-fi tropes of rogue machines or benevolent overlords skew our expectations. They sell us a script where AI is the protagonist. But reality is messier—and more personal. The only “script” AI follows is the one we’re writing, line by line, model by model.

The real danger? Not rogue AGI. It’s the seductive lie that this story is already written.

🪩 AI Isn’t an Alien Force—It’s a Feedback Loop

AI doesn’t exist outside us. It amplifies us.

Look at recommendation algorithms. They don’t invent polarization—they feed off what we click, then magnify it. They don’t conjure connection—they reflect what we prioritize. It’s not magic. It’s a feedback loop. Garbage in, garbage amplified. Care in, care reflected.
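To make the loop concrete, here is a toy sketch in Python. It is not how any real recommender works; the function name `simulate_feedback`, the `click_rates` input, and the `learning_rate` knob are all hypothetical, chosen only for illustration. It assumes two topics start with equal exposure, and that each round the system shifts exposure toward whatever collected more clicks.

```python
def simulate_feedback(click_rates, rounds=20, learning_rate=0.5):
    """Toy model: return each topic's share of exposure after `rounds`.

    click_rates   -- how likely a user is to click each topic when shown it
                     (a stand-in for "what we click"; purely illustrative)
    learning_rate -- how strongly the system chases observed clicks
    """
    n = len(click_rates)
    shares = [1.0 / n] * n  # every topic starts with equal exposure

    for _ in range(rounds):
        # Clicks a topic collects = how often it is shown * how often it is clicked.
        clicks = [s * r for s, r in zip(shares, click_rates)]
        total = sum(clicks)
        if total == 0:
            break  # nobody clicked anything, so there is nothing to amplify

        observed = [c / total for c in clicks]
        # Move exposure partway toward the observed click distribution.
        shares = [
            (1 - learning_rate) * s + learning_rate * o
            for s, o in zip(shares, observed)
        ]

    return shares


# A slight preference (55% vs. 45%) is enough to tilt the loop.
tilted = simulate_feedback([0.55, 0.45])
# No preference, no drift: the loop only amplifies what it is fed.
balanced = simulate_feedback([0.5, 0.5])
print(tilted, balanced)
```

Under these assumptions, the small initial lean toward the first topic grows every round, while equal click rates leave exposure untouched. Nothing in the loop invents a preference; it only magnifies the one it is given.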

This isn’t some abstract philosophical point—it’s a practical imperative. Are we building systems that mirror our worst instincts? Or tools that amplify our best?

AI is not a mirror you glance at once and walk away from. It’s one you’re building even as it reflects you. The image changes only if you do.

❓ Why We Need Questions, Not Quick Fixes

When people talk about AI “taking over,” they’re not really afraid of machines. They’re afraid of obsolescence. Of losing agency. Of staring into a future where human effort feels irrelevant.

But here’s the provocation: What if we stopped trying to soothe those fears with premature answers? What if we sat with them?

When automation displaces a task, the real question isn’t “What do we do now?” It’s “What matters now?”

AI ethics isn’t about racing to perfect regulations. It’s about resisting the urge to patch over complexity. Like debugging a system, it requires patience, iteration, and the humility to admit what we don’t yet know.

⏸️ The Case for Slowing Down

The future of technology punishes haste. Every time we rush to “solve” AI, we miss what it’s showing us about power, values, and ourselves.

Think of AI less like a prophecy to fulfill and more like a design sprint: prototype, test, reflect, repeat. The point isn’t to get it “right” on the first try—it’s to learn what “right” even means.

Try this: write down one fear AI sparks for you—job loss, bias, loss of control. Then ask: what value does this fear point to? What would it mean to protect that value in a technological world?

📝 Write a Better Narrative

AI isn’t a closed book hurtling toward an epilogue. It’s an unfinished dialogue—messy, human, alive.

Reframing the AI conversation means rejecting tidy answers and embracing uneasy questions. Not as a sign of failure but as the only path to wisdom.

What’s one question AI presses on you about your work, your values, your future? Write it down. Don’t rush to resolve it. Let it linger.

The narrative isn’t written. It never was. The pen’s in your hand.